There Was an Error Loading Log Streams. Please Try Again by Refreshing This Page. Cloudwatch

AWS CloudWatch: Error Alerts!

Having managed services for every problem we want to solve is such a blessing, but what if there are more managed services than our problem requires?

Choosing the right approach then becomes the next challenge, because cost and benefits both need a detailed analysis.

Error alerts add such a convenience to the whole monitoring setup. We can simply set the alerts and keep an eye on our preferred notification platform for any metric breach, and boom! There we are, deciphering the problem as soon as we're notified.

Lately, we had to set up error alerts on Mattermost (an open-source, self-hostable online chat service with file sharing, search, and integrations) for a particular log group on CloudWatch.

The solution sounds straightforward, right? We can have SNS (a fully managed messaging service by AWS) and voila, what's the problem then?

The problem is choosing between the plethora of services we have in AWS.

POSSIBLE SOLUTIONS:

Let's break down the problem first:

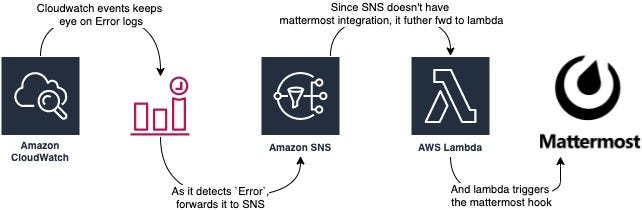

Since this problem requires filtering out the logs with the keyword "ERROR" specifically, there has to be a metric alarm or CloudWatch Event to detect the error as and when it occurs. Since we have to send notifications, there has to be an SNS-like service to send them. But my use case was very specific to an open-source tool, and SNS doesn't support sending notifications directly to Mattermost, so I needed a trigger-based solution in place to forward the notifications using API calls.

Before digging in, let's see how we're charged for Lambda, as that's the common factor.

These are the four factors that determine the cost of your Lambda function:

- The number of executions. You pay for each execution ($2E-7 per execution, or $0.20 per million).

- Duration of each execution. The longer your function takes to execute, the more you pay. This is a good incentive to write efficient application code that runs fast. Billing was in 100 ms increments when this was written (AWS now meters per 1 ms), and the maximum function timeout is 15 minutes.

- Memory allocated to the function. When you create a Lambda function, you allocate an amount of memory to it, which ranged between 128 MB and 1,536 MB under the older limits (the current maximum is 10,240 MB). If you allocate 512 MB of memory to your function but each execution only uses 10 MB, you pay for the whole 512 MB. If your execution needs more memory than the amount allocated to the function, the execution will fail. This means you have to configure the amount of memory that will guarantee successful executions while avoiding excessive over-allocation.

- Data transfer. You pay standard EC2 rates for data transfer (out to the internet, inter-region, intra-region).
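To make the pricing factors above concrete, here is a rough estimator. It is only a sketch: the per-request price comes from the list above, while the per-GB-second price (`PRICE_PER_GB_SECOND`) is an assumption based on the commonly published x86 rate and may differ by region or over time. The function name `estimate_lambda_cost` is made up for this example, and the free tier and data transfer are ignored.

```python
# Rough Lambda cost estimator (sketch; prices are assumptions, check current AWS pricing).
PRICE_PER_REQUEST = 0.20 / 1_000_000   # $0.20 per million executions (from the list above)
PRICE_PER_GB_SECOND = 0.0000166667     # assumed x86 rate, not taken from the article


def estimate_lambda_cost(invocations, avg_duration_ms, memory_mb):
    """Return an approximate monthly cost in USD, ignoring free tier and data transfer."""
    request_cost = invocations * PRICE_PER_REQUEST
    # Compute is billed on allocated memory, not on memory actually used.
    gb_seconds = invocations * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    compute_cost = gb_seconds * PRICE_PER_GB_SECOND
    return request_cost + compute_cost


if __name__ == "__main__":
    # Hypothetical workload: 50,000 error alerts a month, 300 ms each, 128 MB allocated.
    print(round(estimate_lambda_cost(50_000, 300, 128), 4))
```

For an alerting function that only fires on error lines, both terms tend to stay small, which is why the number of services in the pipeline matters more than the Lambda bill itself.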

So a possible solution could be

CloudWatch logs > CloudWatch events/metric alarm > SNS > Lambda subscription

But wait, that looks like too much effort just for having error notifications. I thought so too, and that's how I landed on the Lambda subscription filter. A CloudWatch Logs subscription filter sends log data to your AWS Lambda function based on the filter pattern you've set. This approach boils the effort down to:

CloudWatch logs > Lambda subscription filter

It's not just less effort; it also adds a lot of value from a cost perspective, given our intent behind this exercise.
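If you prefer to create the subscription filter from code rather than the console, a minimal sketch with boto3 could look like this. The helper names, the filter name `error-to-mattermost`, and the ARN in the usage note are hypothetical; `put_subscription_filter` is the CloudWatch Logs API call, and the Lambda function must separately allow invocation by CloudWatch Logs (the console adds that permission for you when you create the trigger there).

```python
def build_subscription_filter_request(log_group, function_arn,
                                      pattern="ERROR",
                                      name="error-to-mattermost"):
    """Build the arguments for the CloudWatch Logs put_subscription_filter call."""
    return {
        "logGroupName": log_group,
        "filterName": name,          # hypothetical filter name
        "filterPattern": pattern,    # matches log events containing the term ERROR
        "destinationArn": function_arn,
    }


def create_subscription_filter(request):
    """Send the request to AWS; needs boto3 and valid credentials."""
    import boto3  # imported lazily so the builder above works without the SDK

    logs = boto3.client("logs")
    logs.put_subscription_filter(**request)
```

Usage would be along the lines of `create_subscription_filter(build_subscription_filter_request("/my/log/group", "<lambda-function-arn>"))`, with your own log group and function ARN filled in.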

Execution

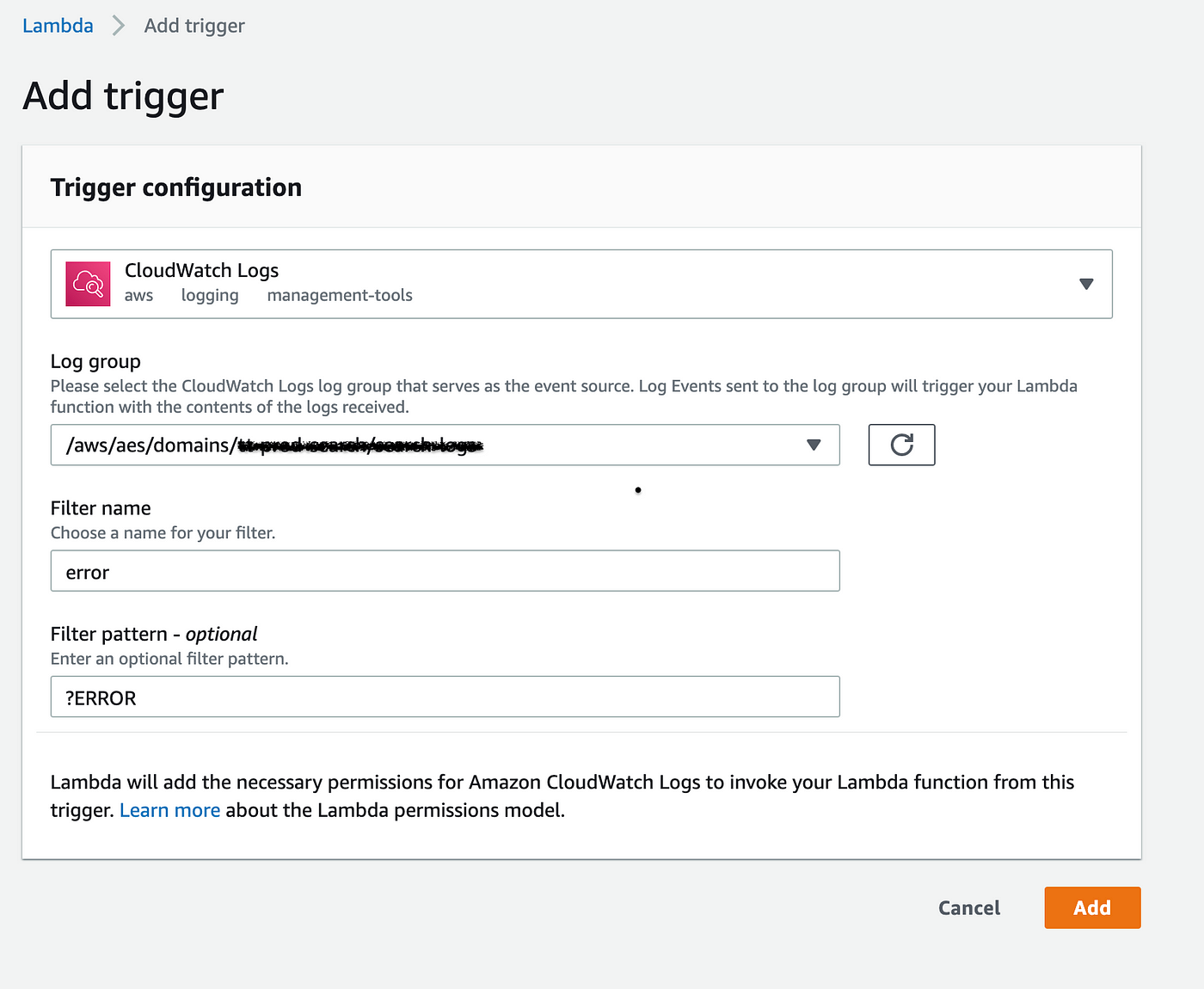

Now that we have outlined the steps required, the next step is to configure the Lambda accordingly. Most of the options are intuitive; attaching a screenshot for reference:

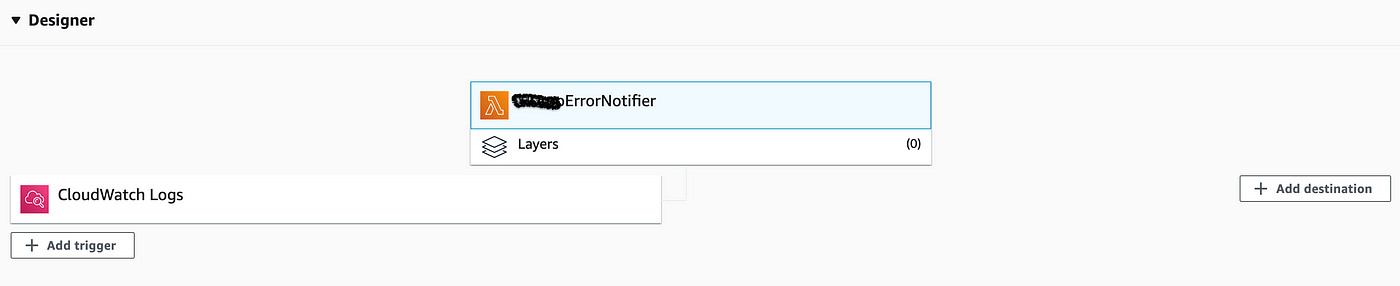

This is going to act as a trigger, so whenever there is a CloudWatch log with the keyword ERROR, it will trigger this Lambda function, which in turn will send notifications. This is how the Lambda designer looks:

Please note that no destination is required here since the Lambda is calling the endpoint itself. In case you want to configure SNS or SES, set the destination accordingly.

Sharing the code just in case it helps you:

    from __future__ import print_function

    import json
    import zlib
    # from datetime import datetime
    from base64 import b64decode

    import urllib3

    print('Loading function')


    def decode(data):
        # The payload arrives base64-encoded and gzip-compressed.
        compressed_payload = b64decode(data)
        # 16 + MAX_WBITS tells zlib to expect a gzip header.
        json_payload = zlib.decompress(compressed_payload, 16 + zlib.MAX_WBITS)
        return json.loads(json_payload)


    def getLogs(event, context):
        # Fetching the logs
        awsLogs = event.get('awslogs')
        # AWS by default shares the CloudWatch logs in encoded format,
        # fetching the main encoded chunk out of it.
        encodedLogs = awsLogs['data']
        # Decoding the logs, this returns the whole JSON data
        logs = decode(encodedLogs)
        # Fetching the main details that need to be shared
        logGroup = logs['logGroup']
        logStream = logs['logStream']
        # timestamp = logs['logEvents'][0]['timestamp']
        # timestamp = 1604778760
        # time = datetime.fromtimestamp(timestamp)
        # Only the first log event of the batch is forwarded
        message = logs['logEvents'][0]['message']
        # Creating a payload with "text" key for sending notification
        payload = {
            "text": "Error detected for the log group: " + logGroup
                    + " \nLog Stream: " + logStream
                    + " \nMessage: " + message + " "
        }
        return payload


    def sendMessage(payload):
        payloadJSON = json.dumps(payload)
        print(payload)
        http = urllib3.PoolManager()
        r = http.request(
            "POST", "https://chat.mattermost.com/hooks/<hook-id>",
            body=payloadJSON,
            headers={'Content-Type': 'application/json'})
        return r.data


    # Entry point for Lambda
    def lambda_handler(event, context):
        payload = getLogs(event, context)
        sendMessage(payload)
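To try the decoding logic without deploying anything, you can build a synthetic event in the same shape CloudWatch Logs uses (JSON, gzip-compressed, then base64-encoded under `awslogs.data`). The sketch below is an illustration under assumptions: `make_test_event` and `build_text` are hypothetical helpers, and the sample log group and messages are made up. It also shows one way to include every log event in the notification, since a single invocation can carry several events while the handler above forwards only the first.

```python
import base64
import gzip
import json
import zlib


def make_test_event(log_group, log_stream, messages):
    """Build a fake subscription-filter event for local testing (hypothetical helper)."""
    payload = {
        "messageType": "DATA_MESSAGE",
        "logGroup": log_group,
        "logStream": log_stream,
        "logEvents": [
            {"id": str(i), "timestamp": 0, "message": m}
            for i, m in enumerate(messages)
        ],
    }
    compressed = gzip.compress(json.dumps(payload).encode("utf-8"))
    return {"awslogs": {"data": base64.b64encode(compressed).decode("ascii")}}


def decode(data):
    """Same decoding steps as the handler: base64, then gzip, then JSON."""
    raw = zlib.decompress(base64.b64decode(data), 16 + zlib.MAX_WBITS)
    return json.loads(raw)


def build_text(logs):
    """Format all log events of the batch, not only the first one."""
    lines = [
        "Error detected for the log group: " + logs["logGroup"],
        "Log Stream: " + logs["logStream"],
    ]
    for log_event in logs["logEvents"]:
        lines.append("Message: " + log_event["message"])
    return "\n".join(lines)


if __name__ == "__main__":
    event = make_test_event("/demo/app", "stream-1", ["ERROR one", "ERROR two"])
    print(build_text(decode(event["awslogs"]["data"])))
```

The same synthetic event can be passed to `getLogs(event, None)` from the code above to check the payload before pointing the function at a real webhook.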

PS: You'd only be able to generate a Mattermost channel hook if you have admin permissions (perks of being in DevOps :P). The channel hooks are present under the settings > integrations option.

Thanks for reading. Hope I was able to add some value to your debugging practices.

Source: https://towardsaws.com/aws-cloudwatch-error-alerts-450e7017198d
